Too many in higher education see problems as nails and testing as the only hammer in our kit. Yet we stand at a time when we can re-mediate assessment using new technologies and old definitions of what it means to learn.

I serve on the Tech Fluency (TF) affinity group at Southern Connecticut State College. TF is a tier one competency in our liberal education program (LEP), our general ed program but with more hoops and loftier goals. The goal is to ensure all students have the minimum tech skills they will require after college. In our current (permanent) budget crisis we have been asked to review the effectiveness of LEP.

We developed a series of rubrics instructors could use in their classrooms. The newly appointed LEP assessment committee decided our approach was “too subjective.” They suggested a common task (filling out a spreadsheet was their recommendation) that students could complete in a controlled and supervised environment.

This set me off. I responded (probably with not enough delay and too much acerbic snark) with some of the following comments.

Objective Assessments are a Hoax

Subjective flickr photo by EVRT Studio shared under a Creative Commons (BY-NC-ND) license

I am not anti-testing. Most of my research revolves around item design and testing. Yet I think what set me off was the belief that some assessments are objective. Those who rely on standard measures ignore the bias inherent in statistical models and in deciding “what counts as learning.” They look at rubric-scored items as being “too subjective” yet ignore the error variance, the noise in their models. I say bring the noise. It is in outliers where we see interesting methods and learning.

Technology Assessments Lack Ecological Validity

We were asked to create a shared assessment. It’s just that the task sounds like 1996 wants its Computer Applications textbook back. We have to move beyond “These kids don’t know spreadsheets” as the only critique in our self-assessment. There is so much more in the competencies beyond the basics of Excel.

The idea that you do anything in tech under supervision, sitting alone in a crowded room, is the wrong approach to assessment. What we are calling cheating will be required collaboration for anyone doing anything with tech in any field.

This is why I think a digital credentialing platform is the correct path forward. If we begin by mapping pathways and rubrics similar to ours, or better yet even finer-grained criteria, we could develop a system where faculty still have the freedom to design (hopefully co-design) a pathway for students.

Students Should Drive Assessment

I think we should involve the students as stakeholders to a much higher degree in any assessment. We do this by helping students tell their story. This also shifts responsibility onto them to build the data trails we need.

Purpose of Assessment

I also took issue with the purpose of our assessment. If the goal is to evaluate the effectiveness of our LEP program, then why is our gut reaction to assess each student individually?

It’s not that the approach of a learning artifact (the spreadsheet assessment we were asked to develop) is the wrong path in terms of overall measurement.

Let us as faculty assess the individual and let machines surface the patterns at class, school, and system level.

Technological Solutions

I think the Academy, long term, should push most system-wide assessment onto machines. It’s way more effective, and machine scoring correlates highly with well-trained human raters.

If we could score a batch with high inter-rater reliability once, and laser-honed the criteria, much of this could be machine scored and credentialed with minimum faculty involvement. Faculty could build whatever assessments they wanted to on top of the task. It really wouldn’t matter to LEP assessment.

This isn’t fantasy. It’s usually $7-10 a user (for the scoring).
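For anyone wondering what "high inter-rater reliability" looks like in practice, here is a minimal sketch of one common agreement statistic, Cohen's kappa, computed between two sets of rubric scores (say, a faculty rater and a machine scorer). The function name and the sample scores are hypothetical. This is the unweighted form, which treats rubric levels as plain categories. A weighted kappa is often preferred for ordinal rubric levels.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items.

    kappa = (observed agreement - chance agreement) / (1 - chance agreement)
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")

    n = len(rater_a)
    # Proportion of items where the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's score distribution.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical score for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical rubric scores (levels 1-4) for eight student artifacts.
human_scores = [3, 2, 4, 1, 3, 2, 4, 4]
machine_scores = [3, 2, 4, 1, 2, 2, 4, 3]
print(round(cohens_kappa(human_scores, machine_scores), 2))
```

A kappa near 1 means the raters agree far more often than chance would predict, and a value near 0 means the agreement is no better than chance.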

Moving Forward

All measurement and all grades are subjective. Yet I think we have a chance to rethink the academy by empowering learners through assessment. It’s time to kill the Carnegie Credit hour.

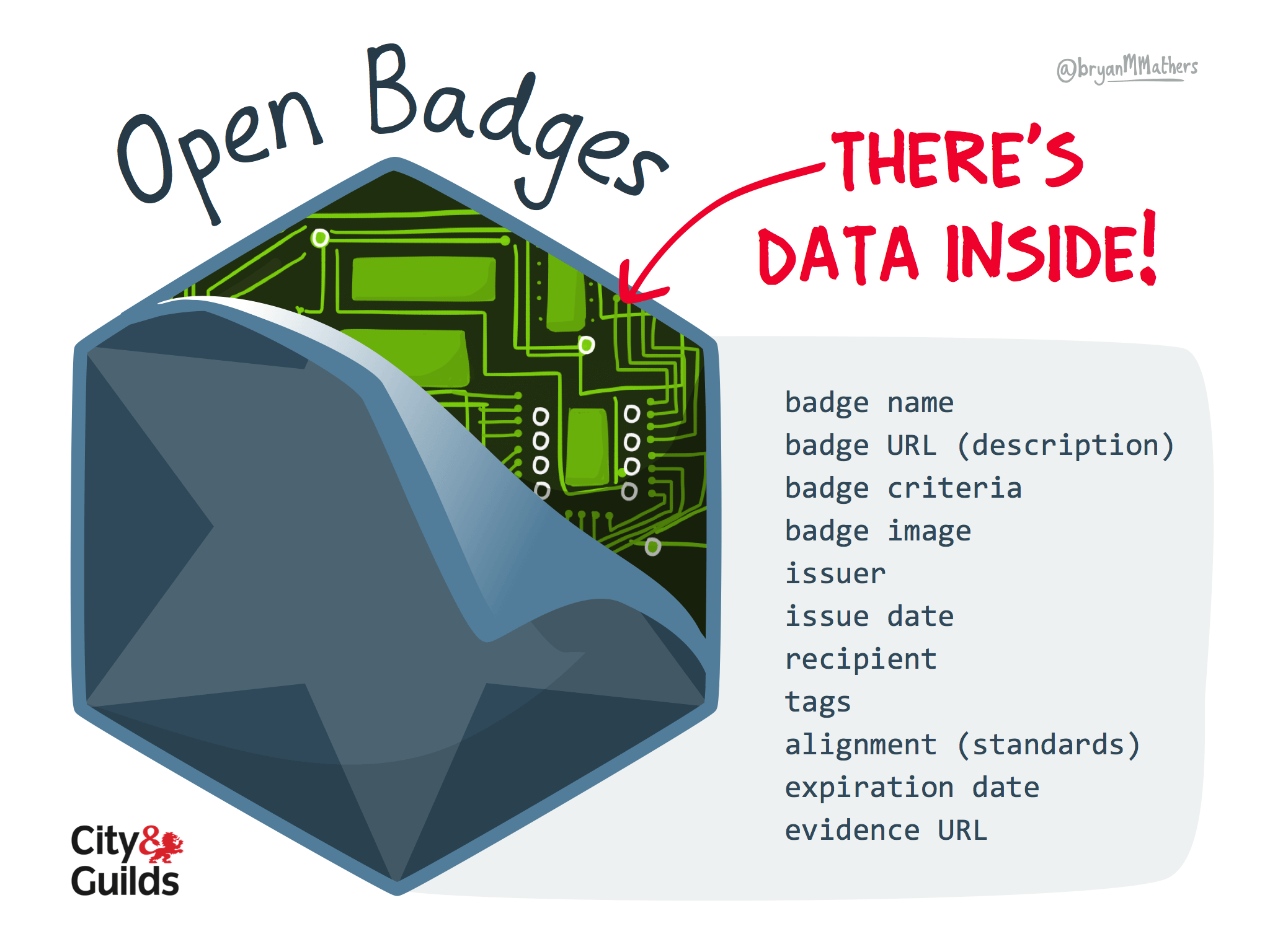

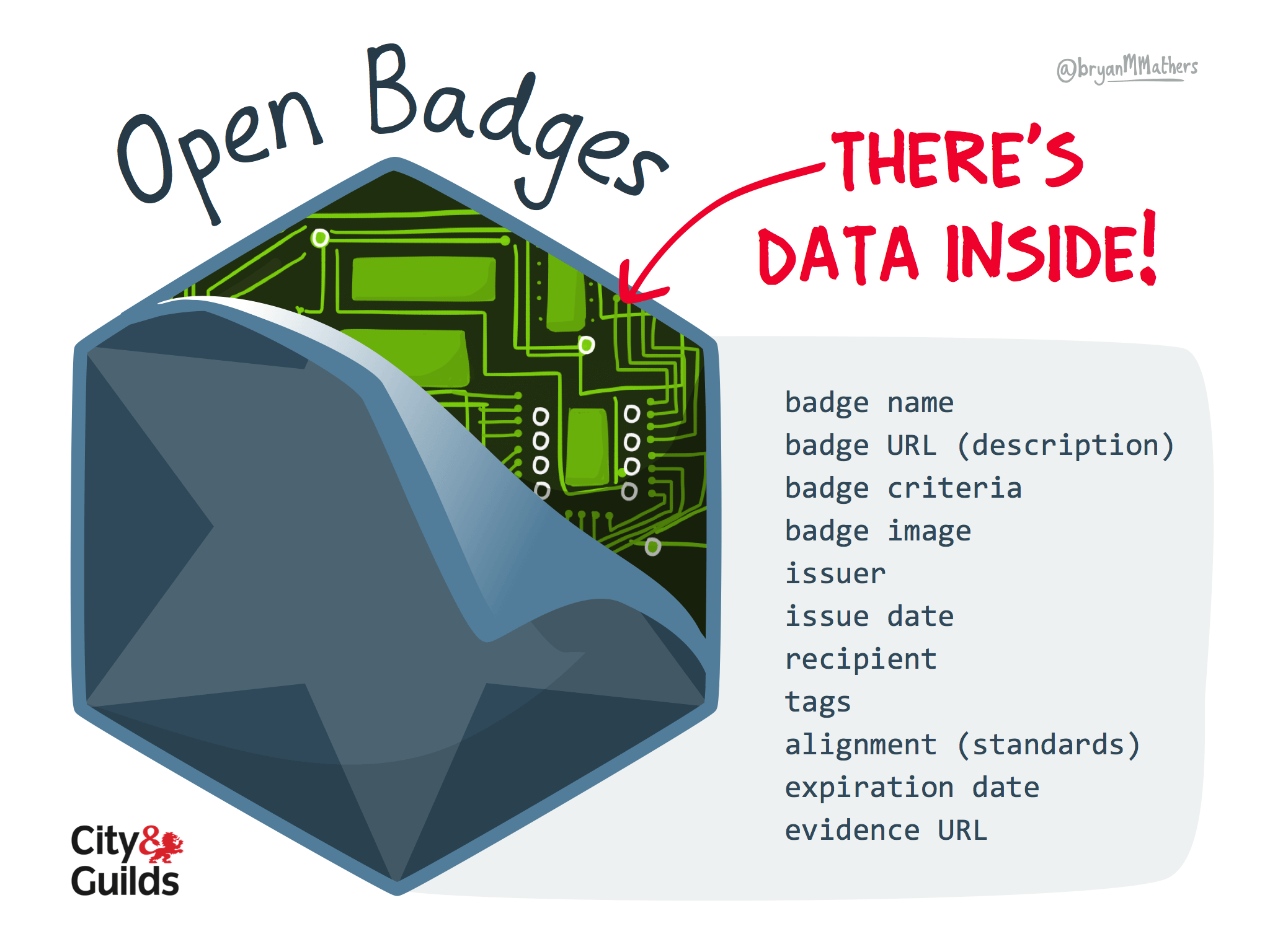

In fact, across the state of Connecticut we have been discussing how to seamlessly transfer students between seventeen community colleges and four universities. Plus, students would like to receive credit for work that demonstrates competencies in our Tier One classes. If we really wanted to think about Transfer Articulation we would forget about tracking credit hours and think of each student as an API. If we had the matching criteria, or even a crosswalk of offerings, it would be a matter of plug-and-play with the assertions built into our credentialing platform.
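To make the "student as an API" idea a little more concrete, here is a rough sketch of how a crosswalk could map the assertions a student brings from one campus onto the competencies another campus requires. Every name in it (the `Assertion` fields, the criterion identifiers, the crosswalk entries) is hypothetical and not taken from any real credentialing platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assertion:
    """A hypothetical record stating that a student met one criterion."""
    student_id: str
    criterion: str
    issuer: str


# Hypothetical crosswalk: sending-campus criterion -> our competency.
CROSSWALK = {
    "cc:spreadsheet-basics": "tf:data-analysis",
    "cc:web-publishing": "tf:digital-communication",
}


def competencies_met(assertions, crosswalk):
    """Return the set of our competencies covered by a student's assertions."""
    return {crosswalk[a.criterion] for a in assertions if a.criterion in crosswalk}


def missing_competencies(assertions, crosswalk, required):
    """Return the required competencies that no assertion maps onto."""
    return set(required) - competencies_met(assertions, crosswalk)


transfer_record = [
    Assertion("s-001", "cc:spreadsheet-basics", "Example Community College"),
    Assertion("s-001", "cc:intro-art", "Example Community College"),
]
required = ["tf:data-analysis", "tf:digital-communication"]
print(missing_competencies(transfer_record, CROSSWALK, required))
```

With something like this, the transfer question stops being "how many credit hours?" and becomes "which criteria still have no matching assertion?"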

This would also allow students to apply previous work they completed in high school or outside of school and get credit for meeting the technology fluency competencies. We can use the new endorsement feature in the Badge 2.0 specification so local schools, computer clubs, or even boot camps could vouch for the independence of student work. The learning analytics can help us with our programmatic review and tracking student knowledge growth.
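As a rough illustration of what such an endorsement might look like, the sketch below builds an Endorsement document as a Python dictionary and prints it as JSON. The field names follow my reading of the Open Badges 2.0 Endorsement class and should be checked against the specification before use. The helper name, the URLs, and the comment text are all hypothetical.

```python
import json


def build_endorsement(endorsement_id, endorser_id, assertion_id, comment, issued_on):
    """Build a hosted Endorsement of a student's badge assertion.

    Field names are based on a reading of the Open Badges 2.0 spec
    (an assumption to verify); all values passed in are illustrative.
    """
    return {
        "@context": "https://w3id.org/openbadges/v2",
        "type": "Endorsement",
        "id": endorsement_id,
        "issuer": endorser_id,      # the club, school, or boot camp vouching
        "issuedOn": issued_on,      # ISO 8601 timestamp
        "claim": {
            "id": assertion_id,     # the thing being endorsed
            "endorsementComment": comment,
        },
        "verification": {"type": "hosted"},
    }


endorsement = build_endorsement(
    endorsement_id="https://club.example.org/endorsements/42.json",
    endorser_id="https://club.example.org/issuer.json",
    assertion_id="https://badges.example.edu/assertions/abc123.json",
    comment="The student completed this project independently at our club.",
    issued_on="2018-05-01T12:00:00+00:00",
)
print(json.dumps(endorsement, indent=2))
```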

Some Examples